Gaussian Splatting and the Infrastructure for Spatial Computing

Michael Rubloff on Gaussian splatting and where the opportunities are for founders building spatial infrastructure

Michael is the founder of Radiance Fields, a consulting firm specialising in 3D reconstruction technology. He built his expertise and online presence in radiance fields before the technology hit mainstream adoption, positioning him at the forefront of this emerging space. Through his consulting work with corporations exploring 3D applications and his industry-recognised content, Michael offers both technical depth and market perspective on how radiance fields and Gaussian splatting are reshaping industries from e-commerce to construction.

Every digital product today pretends reality is flat.

For 40 years, we've forced the complexity of the 3D world onto rectangular screens. This worked well enough for film and web content, but it created two structural gaps that are now barriers to progress:

Physics Gap: Traditional 2D tools and manual 3D modelling cannot accurately capture the physical behaviour of light and materials in the real world.

Scale Gap: Traditional 3D pipelines are too slow and labour-intensive to digitise the physical world at the speed and fidelity required.

If computing is to become truly spatial - if AI, robots, and AR are to operate convincingly in physical environments - digital objects must behave like real ones.

The industry's historical solution, manual creation (modelling scenes by hand in tools like Blender and Unreal), simply does not scale.

The revolution lies in switching focus from manual creation to scalable capture.

The technical breakthrough is a family of AI techniques called Radiance Fields, with Gaussian Splatting (GS) emerging as the most practical implementation. GS can reconstruct photorealistic 3D scenes from ordinary photos or video.

Gaussian splatting excels at capturing large, expansive spaces without sacrificing smaller details.

Gaussian Splatting is the first 3D technology to simultaneously deliver the necessary speed and fidelity to bridge the digital and physical divide. It transforms the capture of real-world physics into a scalable, accessible process.

At FOV Ventures, this capability is the foundation of our investment thesis: spatial computing requires solving content creation at scale first. We believe this technology creates the essential, missing infrastructure layer.

We spoke with Michael Rubloff of RadianceFields.com to get a view on this rapidly accelerating space and what is now suddenly possible.

Why This Matters Now: The Shift to Real-Time Reality

The entire value proposition of Radiance Fields lies in their approach: they bypass traditional 3D construction entirely.

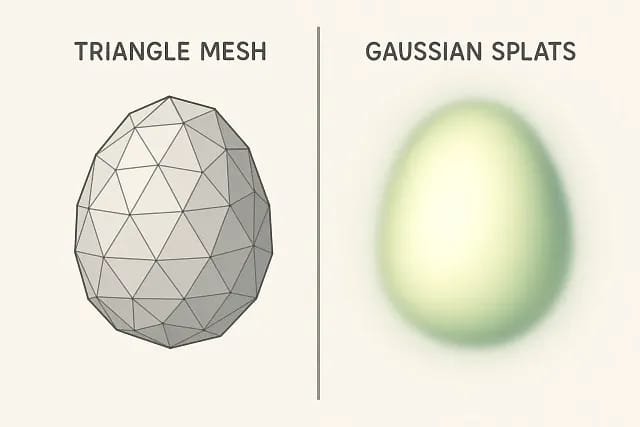

Instead of creating geometry - the manual, low-fidelity process of modelling with polygons - these AI methods learn how light travels through space from multiple input images. This captures not just shape, but the physical behaviour of light, ensuring material fidelity that manual methods could never match.

Polygons vs Splats: two ways of building a 3D world
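To make "learning how light travels through space" concrete: NeRF-style methods march along each camera ray, read a density and a colour at sample points, and blend them front to back using the standard discrete volume-rendering sum C = sum_i T_i * alpha_i * c_i, where alpha_i = 1 - exp(-sigma_i * delta_i) and T_i is the light still unabsorbed before sample i. The sketch below shows only that blending step. In a real NeRF a neural network predicts the densities and colours; here they are passed in by hand, and the function name `render_ray` is our own.

```python
import math


def render_ray(densities, colors, deltas):
    """Composite samples along one camera ray, front to back.

    densities: volume density sigma_i at each sample (>= 0)
    colors:    (r, g, b) colour emitted at each sample
    deltas:    distance between consecutive samples along the ray
    """
    transmittance = 1.0  # fraction of light not yet absorbed
    out = [0.0, 0.0, 0.0]
    for sigma, c, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # chance the ray stops in this segment
        weight = transmittance * alpha
        for k in range(3):
            out[k] += weight * c[k]
        transmittance *= 1.0 - alpha
    return tuple(out), transmittance
```

Training adjusts the network so that rays rendered this way reproduce the pixel colours of the input photos, which is why ordinary 2D images are enough as input.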

While this concept emerged years ago, the initial implementations, like Neural Radiance Fields (NeRFs), were computationally intensive. They delivered photorealism but required vast compute (often minutes to render a single frame), making them impractical for any real-time application. That changed in 2022, when NVIDIA published Instant NGP, sharply reducing reconstruction time and VRAM requirements.

The critical leap occurred with Gaussian Splatting (GS), which won best paper at SIGGRAPH in 2023. The paper was published in April that year, and for months nobody reacted. Then it won at SIGGRAPH and the response was immediate: companies like Niantic's Scaniverse pivoted overnight, redirecting all resources toward Gaussian splatting.

While NeRFs still produce better visual quality in many cases, they can't (for now) be distributed easily across devices or rendered in real time on standard hardware. Gaussian splatting strikes the necessary compromise by representing a scene as millions of explicit, optimised 3D Gaussians (small ellipsoidal blobs, each with a position, shape, opacity and colour) rather than as an implicit neural network. Because these primitives can be rasterised efficiently on standard GPUs at real-time speeds (100+ FPS), a research curiosity became commercially viable.
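The rasterisation step can be sketched in a few lines. Once each 3D Gaussian has been projected onto the image (giving a 2D centre, a 2x2 covariance, a depth, an opacity and a colour), a pixel's colour is obtained by sorting the splats by depth and alpha-compositing them nearest first. This is a simplified, single-pixel illustration rather than the tile-based GPU rasteriser used in practice; the dictionary keys and the cut-off thresholds are assumptions chosen for readability.

```python
import math


def splat_pixel(px, py, splats):
    """Alpha-composite already-projected 2D Gaussians at one pixel.

    Each splat is a dict with (illustrative) keys:
      depth, mean (x, y), cov (a, b, c) for the covariance [[a, b], [b, c]],
      opacity in [0, 1], color (r, g, b).
    """
    transmittance = 1.0
    out = [0.0, 0.0, 0.0]
    for s in sorted(splats, key=lambda s: s["depth"]):  # nearest first
        a, b, c = s["cov"]
        det = a * c - b * b
        if det <= 0:
            continue  # degenerate footprint, skip it
        ia, ib, ic = c / det, -b / det, a / det  # inverse 2x2 covariance
        dx, dy = px - s["mean"][0], py - s["mean"][1]
        power = -0.5 * (ia * dx * dx + 2 * ib * dx * dy + ic * dy * dy)
        alpha = min(0.99, s["opacity"] * math.exp(power))
        if alpha < 1.0 / 255.0:
            continue  # contribution too small to see
        for k in range(3):
            out[k] += transmittance * alpha * s["color"][k]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:
            break  # pixel is effectively opaque
    return tuple(out)
```

Because every splat is an explicit primitive evaluated with a fixed formula, this loop maps directly onto GPU rasterisation, which is where the real-time frame rates come from; a NeRF instead has to query a neural network many times per ray.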

A small but growing number of engineers Michael speaks with are questioning the long-term viability of triangle meshes as the fundamental representation for 3D. NeRFs can produce photorealistic 3D in files under 1MB, smaller than traditional meshes (although more work is needed here). The main drawback is that they need powerful GPUs to render, but as GPUs get cheaper and faster, that constraint eases. Some engineers think neural representations like NeRFs will eventually replace meshes entirely: smaller file sizes, better quality, and they capture how light actually behaves rather than approximating it with geometry.

Gaussian splatting's practical advantage came from three things converging at once:

Improved Camera Accessibility: Almost any camera can now produce the data for radiance fields, since the input is ordinary 2D images.

Accessible Compute: Real-time rendering runs on standard GPUs, not expensive datacenters.

Sophisticated AI Models: Modern models can handle messy, real-world conditions such as camera shake and imperfect lighting.

As a result, the technology is rapidly moving from research to production.

Meta's recently launched Hyperscape uses radiance field capture on Quest headsets to create photorealistic virtual replicas of physical spaces, now rolling out multiplayer features so multiple people can explore scanned rooms together.

Hyperscape Capture remains one of the highest-profile deployments of Gaussian Splatting for consumer VR, turning a scan made on a standalone headset into a view-dependent radiance field representation that users can revisit later.
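"View-dependent" means a splat's colour changes with the viewing direction, which is how sheen and reflections survive capture. The original 3D Gaussian Splatting work stores this as spherical-harmonic (SH) coefficients per Gaussian. Below is a minimal sketch of degree-1 SH evaluation, assuming the sign convention and +0.5 offset of that reference implementation; we have not checked how Hyperscape itself encodes colour, so treat this as a generic illustration with our own function name.

```python
import math

# Real spherical-harmonic constants for bands 0 and 1
SH_C0 = 0.28209479177387814  # 1 / (2 * sqrt(pi))
SH_C1 = 0.4886025119029199   # sqrt(3) / (2 * sqrt(pi))


def sh_color(coeffs, view_dir):
    """Evaluate a degree-1 SH colour for one Gaussian.

    coeffs:   four (r, g, b) triples: the constant term, then the y, z and x terms
    view_dir: direction from the camera to the Gaussian (need not be normalised)
    """
    x, y, z = view_dir
    n = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / n, y / n, z / n
    rgb = []
    for k in range(3):
        v = (SH_C0 * coeffs[0][k]
             - SH_C1 * y * coeffs[1][k]
             + SH_C1 * z * coeffs[2][k]
             - SH_C1 * x * coeffs[3][k]
             + 0.5)  # offset so all-zero coefficients give mid-grey
        rgb.append(max(0.0, v))  # clamp: colours cannot be negative
    return tuple(rgb)
```

Higher degrees (up to 3 in the original paper, i.e. 16 coefficients per colour channel) capture sharper view-dependent effects at the cost of more storage per Gaussian.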

Meta have also released SAM 3D Objects for single-image 3D reconstruction, which powers Facebook Marketplace's "View in Room" feature for furniture visualisation. The research is rapidly moving beyond demos into real products being shipped to serve users. When Big Tech invests at this scale across complementary 3D technologies, it validates that the infrastructure layer has crossed from experimental to essential.

Hardware, too, is evolving to match the software.

The capture barrier is rapidly falling. Today's smartphone cameras, particularly the iPhone with its depth of field, sharpness, and computational photography, are good enough for quality reconstructions without specialised equipment, so people can build photorealistic 3D models with the phone in their pocket.

And elsewhere, consumer hardware is being repurposed in unexpected ways. Action cameras like Insta360, originally designed for extreme sports footage, are now being rigged up in arrays of 30+ units for volumetric capture. Companies are using tools in ways manufacturers never intended, a clear signal that demand is outpacing purpose-built solutions.

XGRIDS launched the first cameras designed specifically for Gaussian splatting capture, with built-in SLAM (Simultaneous Localisation and Mapping) for spatial tracking. At $5,000, its Portal sits at prosumer pricing: expensive for consumers but within range of what professionals already spend on high-end mirrorless cameras and lenses.

Michael expects this tier to expand rapidly over the next two years, with truly consumer-grade devices arriving within five years. Major manufacturers like Canon and Sony will likely be forced to release lines that shoot natively in 3D.

The trajectory mirrors photography's evolution:

→ professional equipment shrinks to consumer form factors

→→ costs drop by orders of magnitude

→→→ suddenly everyone creates content that once required specialists.

When capture becomes that accessible, the constraint shifts from "can we create enough 3D content" to "what do we build with all this 3D data".

This shift unlocks immediate, high-value opportunities across multiple verticals.

Where The Opportunities Are

Michael has been tracking this space long enough to separate near-term opportunities from distant possibilities.

His assessment is that startups need to focus on industries with clear, funded problems where better 3D visualisation creates immediate, measurable value rather than chasing consumer applications that may take years to materialise.

Hard services industries offer the clearest path.

Geospatial companies, architecture firms, and construction operations already pay significant money for 3D data through LiDAR scans, photogrammetry, and drone surveys.

Current methods work, but they're expensive and time-consuming. Because these buyers already pay for 3D data, radiance fields don't have to convince them it is valuable; they just have to prove they can deliver it faster and cheaper. "Even the visualisation itself is a huge step forward from what they have traditionally worked with," Michael notes. The value proposition is immediate: better spatial data, lower cost per capture, and faster turnaround from field work to usable models.

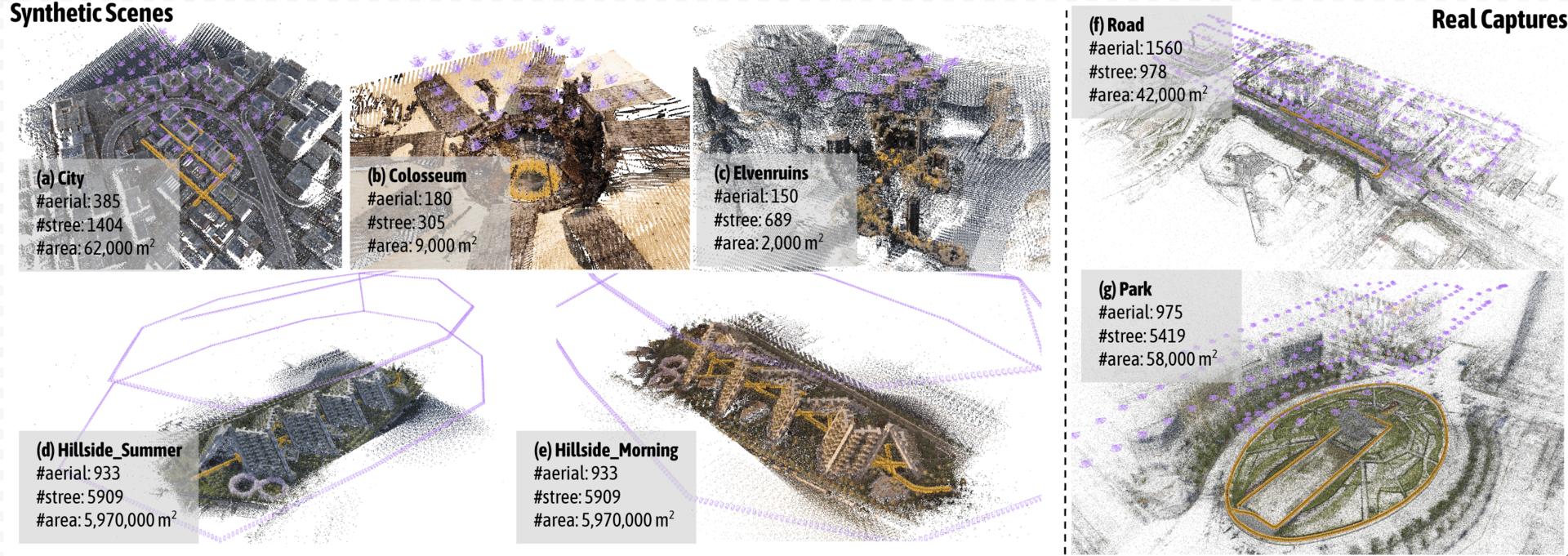

Horizon-GS tackles unified reconstruction and rendering for aerial and street views with a new training strategy, overcoming viewpoint discrepancies to generate high-fidelity scenes.

Architecture, engineering, and construction workflows need accurate spatial documentation for planning, change detection, and as-built verification. These industries understand ROI in concrete terms, which makes them attractive beachheads for startups that can navigate longer sales cycles and complex procurement processes.

Education and training applications create repeatable value.

Creating realistic training environments typically requires either expensive physical mockups or months of 3D modelling work by specialised artists.

Radiance fields let you capture a real space once and train people in that exact environment repeatedly, which matters enormously for medical training, industrial safety protocols, and military simulations where the physical environment is critical and mistakes carry real consequences.

The content creation bottleneck has kept many training applications stuck in simplified 3D environments or 2D video instructional content. Radiance fields remove that constraint entirely, enabling organisations to build libraries of real-world scenarios that would be too dangerous, expensive, or logistically complex to recreate physically for every training session.

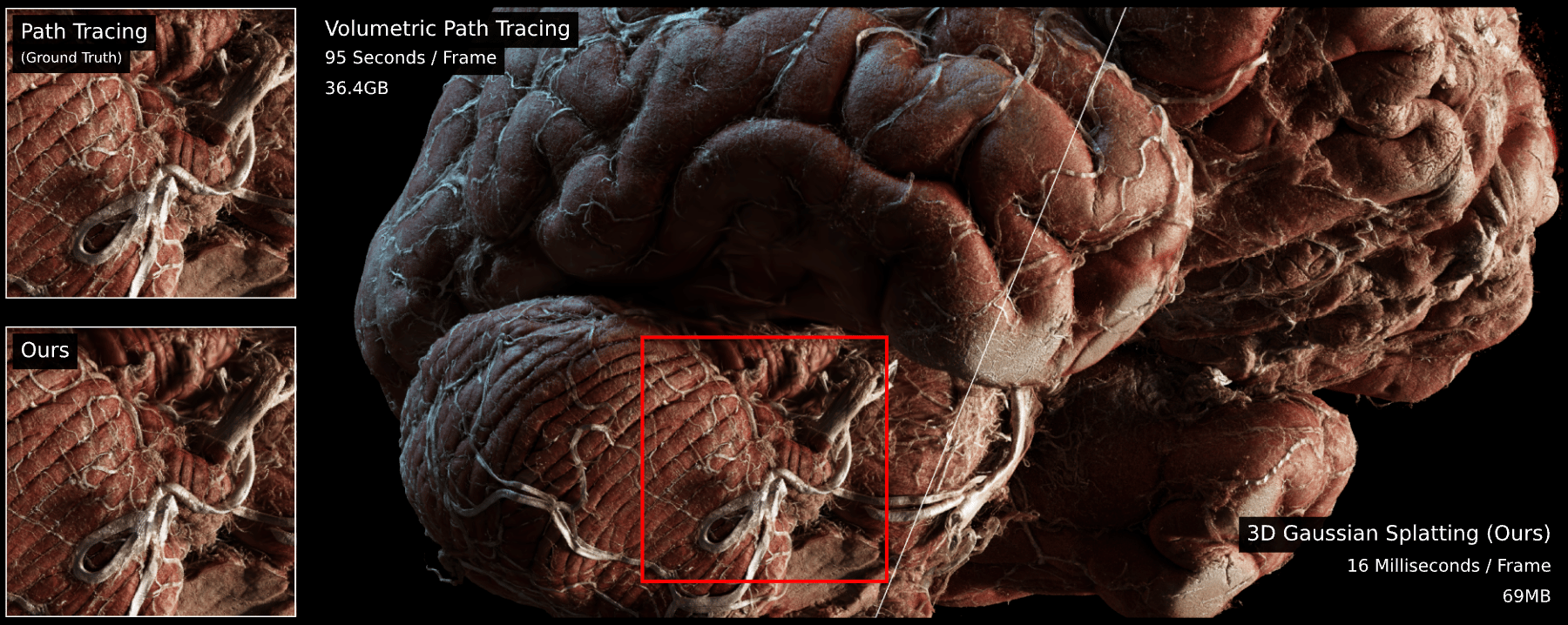

This capability is already being demonstrated in the medical field, as 3D Gaussian Splatting makes cinematic, photorealistic, interactive 3D visualisations of human anatomy accessible on consumer devices, potentially moving complex anatomical study from restricted lecture halls to mobile, personal learning environments.

Application of 3D Gaussian Splatting for Cinematic Anatomy on Consumer Devices

The middleware layer remains mostly unbuilt.

Reconstruction tools exist, viewers exist, but the connective tissue between capture and application is sparse. Major platforms are already adding native support - Houdini announced Gaussian splatting integration, as did Octane Render, and plugins exist for Unity and Unreal. Michael expects consolidation here, with existing software platforms absorbing basic functionality rather than startups building standalone tools.

The question for companies building in this layer is whether they're creating features that eventually get absorbed or solving problems those platforms fundamentally can't address because they're too general-purpose. The opportunities exist in workflow-specific tools: automated quality checking for captures, format conversion pipelines, optimisation tools that reduce file sizes while preserving visual fidelity, and integration bridges between capture hardware and industry-standard software.
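File size shows why optimisation tools matter. Assuming the parameter layout commonly used by the original 3D Gaussian Splatting implementation (position, scale, rotation quaternion, opacity and degree-3 spherical-harmonic colour, all stored as 32-bit floats), a back-of-the-envelope size estimate and one simple reduction technique, uniform 8-bit quantisation, look like this. The function names are hypothetical, and production compressors combine further tricks (pruning, codebooks, entropy coding) not shown here.

```python
# Floats per Gaussian, assuming the original 3DGS layout (float32 values).
LAYOUT = {
    "position": 3,
    "scale": 3,
    "rotation": 4,  # unit quaternion
    "opacity": 1,
    "sh": 48,       # degree-3 spherical harmonics: 16 coefficients x 3 colour channels
}


def scene_bytes(num_gaussians, bytes_per_float=4, sh_floats=LAYOUT["sh"]):
    """Raw, uncompressed size of a splat scene in bytes."""
    floats = sum(LAYOUT.values()) - LAYOUT["sh"] + sh_floats
    return num_gaussians * floats * bytes_per_float


def quantize_8bit(values):
    """Map floats to integer codes 0..255 sharing one offset and step size."""
    lo, hi = min(values), max(values)
    step = (hi - lo) / 255 if hi > lo else 1.0
    codes = [round((v - lo) / step) for v in values]
    return codes, lo, step


def dequantize_8bit(codes, lo, step):
    """Recover approximate floats; error is at most half a step per value."""
    return [lo + c * step for c in codes]
```

Under these assumptions, one million Gaussians take 236,000,000 bytes (about 236 MB) raw, or 56,000,000 bytes if only view-independent colour is kept; 8-bit codes then store each remaining value in one byte instead of four, trading a small, bounded rounding error for size.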

Vertical-specific solutions will win over horizontal platforms.

Michael emphasises there won't be one winner serving all industries. Each vertical has different technical requirements and workflow needs. Geospatial applications need outdoor capture at scale with georeferencing and integration with GIS systems. E-commerce needs controlled lighting, precise colour accuracy, and fast iteration for product catalogue consistency. Architecture requires integration with BIM workflows, construction documentation standards, and compliance with building codes. Training applications need real-time manipulation, scenario editing capabilities, and assessment tracking.

Companies building horizontal platforms will lose to specialists who understand specific industry requirements deeply enough to build the right features, integrations, and go-to-market strategies that resonate with buyers who already have established workflows and purchasing processes.

Consumer and prosumer tools are emerging.

The fundamental accessibility of Gaussian Splatting - running on commodity hardware - guarantees a vibrant market for consumer and prosumer tools, even if the immediate ROI is less defined than in enterprise applications. The technical capability is already shifting the creator economy around 3D content.

Beyond creation, this accessibility enables the most personal use case: Memory Capture. Families can now scan rooms, objects, and moments, creating the spatial equivalent of photo albums. Apple's adoption of Gaussian Splatting for its Vision Pro platform validates this future: the technology powers its highly immersive "Spatial Scenes" and the lifelike "Personas" used in virtual communication. The technical capability exists today to capture grandparents, childhood homes, and meaningful objects in full 3D, creating 3D versions of important moments that people will wish they had 50 years from now.

Apple’s “Spatial Scenes”

Building on this, prosumer creators are finding workflows. Tools like PlayCanvas's SuperSplat make basic editing accessible, and platforms like Arrival Space enable sharing captures with spatial audio and multi-user exploration: imagine touring a friend's apartment renovation or a wedding venue before booking.

The creator economy hasn't fully materialised yet, but the tools are laying the groundwork for platforms to distribute 3D content at scale.

The Ultimate Prize: Gaussian Splatting as the Foundation for Physical AI

While the commercial opportunities in AEC and training are significant, the ultimate, high-value application of radiance fields goes well beyond better graphics: it is creating the foundational data layer required for Physical AI.

AI models need massive amounts of high-quality 3D data to truly understand spatial relationships and physical behaviour. You can't train robots to navigate spaces or manipulate objects without photorealistic 3D representations of how the world actually works.

NVIDIA's integration of Gaussian Splatting into Omniverse and Isaac Sim through their NuRec neural rendering API makes this concrete. They are using it to create physically accurate digital twins of factory floors and real-world spaces where robots are trained before deployment.

The Physically Embodied Gaussians approach (modelling real geometry, surfaces, and physical properties) combines particles for physics simulation with Gaussians for visual appearance, creating a continuously synchronised world model. Speed is key here: Gaussian splatting renders fast enough to enable real-time SLAM, allowing robots to build live internal models of environments as they navigate.
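The idea of pairing physics particles with appearance Gaussians can be illustrated with a toy example: particles are advanced by a simple physics step, and each Gaussian rides on a parent particle at a fixed offset, so whatever the simulation does, the rendered appearance follows. Everything here (the class names, the gravity-plus-floor physics, the rigid offsets) is a hypothetical simplification; the published approach also uses camera observations to correct the simulated state, which this sketch omits.

```python
from dataclasses import dataclass

GRAVITY = (0.0, 0.0, -9.81)  # m/s^2, z pointing up


@dataclass
class Particle:
    pos: list  # [x, y, z] in metres
    vel: list  # [vx, vy, vz] in m/s


@dataclass
class BoundGaussian:
    parent: int    # index of the particle this Gaussian is attached to
    offset: tuple  # fixed (dx, dy, dz) from the particle centre


def step(particles, dt, floor_z=0.0):
    """Physics half: semi-implicit Euler under gravity with a hard floor."""
    for p in particles:
        for k in range(3):
            p.vel[k] += GRAVITY[k] * dt  # update velocity first...
            p.pos[k] += p.vel[k] * dt    # ...then position with the new velocity
        if p.pos[2] < floor_z:           # resolve floor penetration
            p.pos[2] = floor_z
            p.vel[2] = 0.0


def gaussian_centres(particles, gaussians):
    """Appearance half: every Gaussian follows its parent particle."""
    return [
        tuple(particles[g.parent].pos[k] + g.offset[k] for k in range(3))
        for g in gaussians
    ]
```

Keeping physics and appearance in separate but linked structures means the simulator can stay coarse (few particles) while the renderer stays detailed (many Gaussians per particle).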

Research groups are already building on this foundation. SplaTAM and Gaussian-SLAM create dense, photorealistic maps of unknown environments in real time as robots explore. GaussianGrasper allows robots to understand and interact with objects using language prompts and view-robust policies. Outside research, Volvo uses Gaussian splatting for safety simulations and virtual world creation in autonomous vehicle development.

The pattern: wherever robots need to understand physical spaces, Gaussian splatting provides the training data.

GaussianGrasper lets a robot look at just a few pictures of a scene, quickly build a simple 3D version of it using “Gaussian splats,” understand which object you’re talking about (from your words), and then figure out the safest place to grab it.

Gaussian Splatting to enhance, train and validate Volvo’s driver safety systems.

This connects directly to FOV's thesis on physical AI and robotics.

Spatial computing, computer vision, and embodied AI are converging, and all depend on the same underlying requirement: high-fidelity representations of the physical world. Radiance fields provide that foundation in ways traditional 3D modelling never could, because they capture not just geometry but the physical behaviour of light, materials, and spatial relationships that AI systems need to learn from.

Companies like Gauzilla Pro (a web editor for 3D Gaussian Splatting) are already thinking beyond static visualisation toward how lifelike 3D becomes the foundation for AI systems that need to understand and interact with physical spaces, and their tools make those high-fidelity 3D maps simple to create.

This convergence is not linear; it is a powerful feedback loop.

Better capture methods create superior training data, which produces more capable AI models, which enable smarter robotic systems, all demanding even higher fidelity spatial data. Radiance fields finally provide the bedrock for this loop.

This is the missing infrastructure that will unlock physical-world computing.

At FOV Ventures, we're investing in companies solving different pieces of this stack - from material capture to object digitisation to spatial AI applications. The companies that win will be the ones that understand both the technical capabilities and the specific industry problems where those capabilities create measurable value.

If you're building at the intersection of 3D capture, spatial computing, or physical AI, we'd like to hear from you. Reach out.

Thanks to Michael Rubloff of RadianceFields.com for sharing his insights on this rapidly evolving space. For anyone working with or researching radiance fields, his site remains the definitive resource for tracking developments in NeRFs, Gaussian splatting, and related technologies.